|

Hi there! I am a PhD candidate. Previously, I was an undergraduate student in the Department of Electrical and Electronics Engineering at Bilkent University, where I worked in the Imaging and Computational Neuroscience Laboratory (ICON Lab) at the National Magnetic Resonance Research Center under the supervision of Prof. Tolga Çukur, focusing on deep learning for accelerated MRI synthesis and reconstruction. I am currently looking for full-time research scientist/engineer positions starting Summer 2026. Please feel free to reach out if you have any opportunities! |

|

I am currently passionate about post-training strategies that improve visual reasoning in Multimodal Large Language Models (MLLMs), with a focus on how models process and reason over complex visual data. A central goal of my work is to address limitations such as the lack of mental visualization, which we revealed in our recent benchmark, Hyperphantasia (NeurIPS 2025).

Previously, my research spanned mechanistic interpretability, inverse problems, large language models, and learning theory, approaching machine learning problems from both practical and theoretical perspectives. Using sparse autoencoders (SAEs), I studied how human-interpretable concepts emerge and evolve across reverse diffusion steps in diffusion models (NeurIPS Spotlight 2025). I developed diffusion-based methods for inverse problems, including denoising and deblurring, in our works Adapt and Diffuse (ICML Spotlight 2024) and DiracDiffusion (ICML 2024). I also worked on HUMUS-Net (NeurIPS 2022), a transformer–convolution hybrid architecture that achieved state-of-the-art performance on the fastMRI dataset. On the theory side, I analyzed the gradient descent dynamics of learning linear target functions with shallow ReLU networks (in submission).

I also have extensive experience with large language models (LLMs), including fine-tuning, continual pretraining, post-training, prompting, and evaluation. During my 2024 internship at Amazon, I worked on knowledge injection into LLMs through continual pretraining with DoRA adapters and retrieval-augmented generation. During my 2025 internship at Amazon, I developed a novel approach for extracting use-case-adaptive embeddings from LLM hidden states for downstream applications. I have additionally explored improving mathematical reasoning in LLMs via self-feedback and self-revision loops, without relying on external verifiers.

Selected papers are shown below. |

|

Emergence and Evolution of Interpretable Concepts in Diffusion Models

Berk Tinaz*, Zalan Fabian*, Mahdi Soltanolkotabi (* denotes equal contribution)

NeurIPS (Spotlight), 2025

CVPR VisCon (Spotlight + Best Paper Honorable Mention) and GMCV Workshops, 2025

GitHub / Paper Link

Using sparse autoencoders (SAEs), we investigate how human-interpretable concepts evolve in diffusion models throughout the generative process. Furthermore, we demonstrate that these concepts can be manipulated to steer image generation. |

|

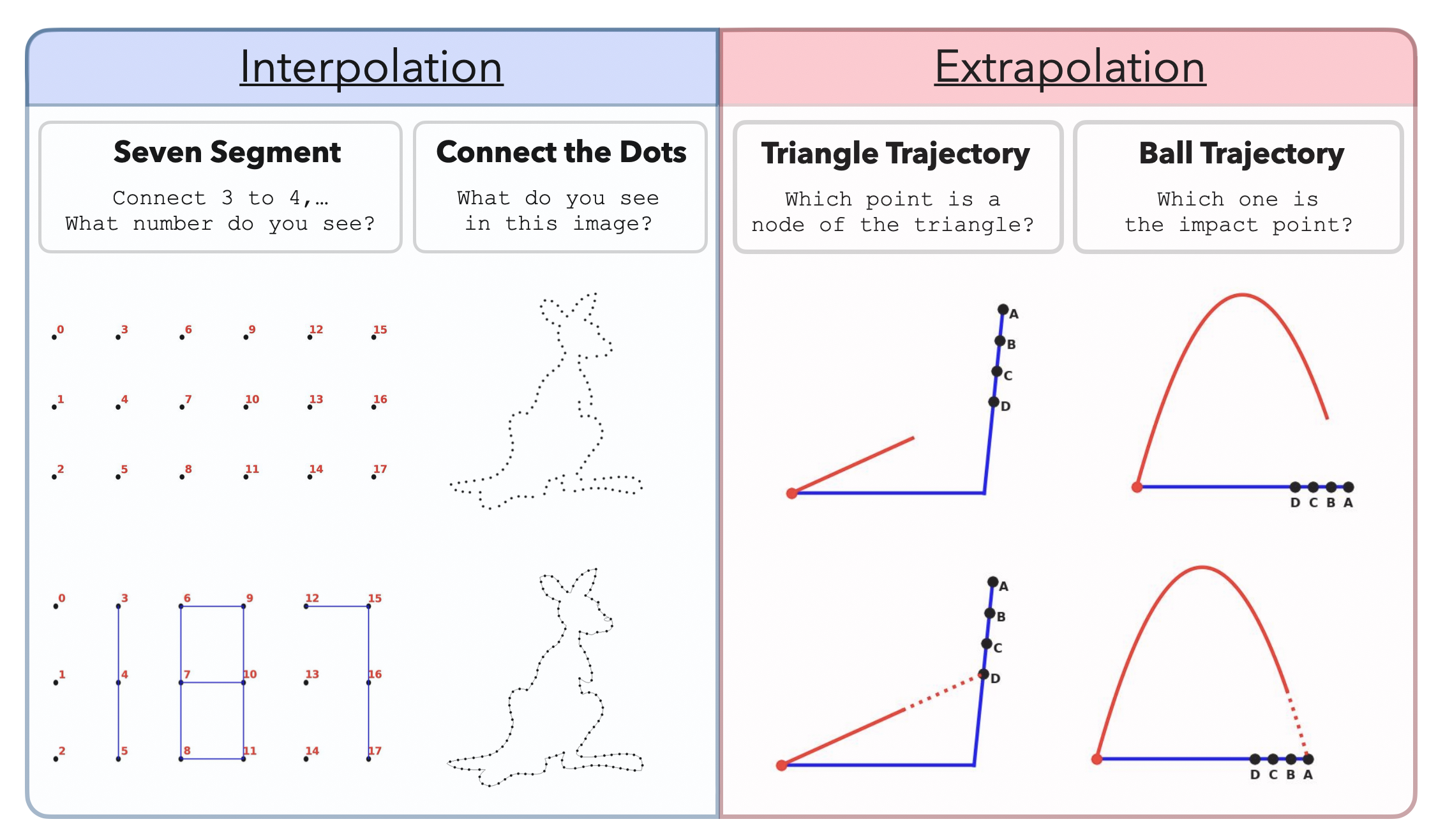

Hyperphantasia: A Benchmark for Evaluating the Mental Visualization Capabilities of Multimodal LLMs

Mohammad Shahab Sepehri, Berk Tinaz, Zalan Fabian, Mahdi Soltanolkotabi

NeurIPS, 2025

GitHub / Paper Link

We introduce Hyperphantasia, a benchmark to evaluate the mental visualization capabilities of multimodal large language models (MLLMs). We demonstrate a substantial gap between the performance of humans and state-of-the-art MLLMs. |

|

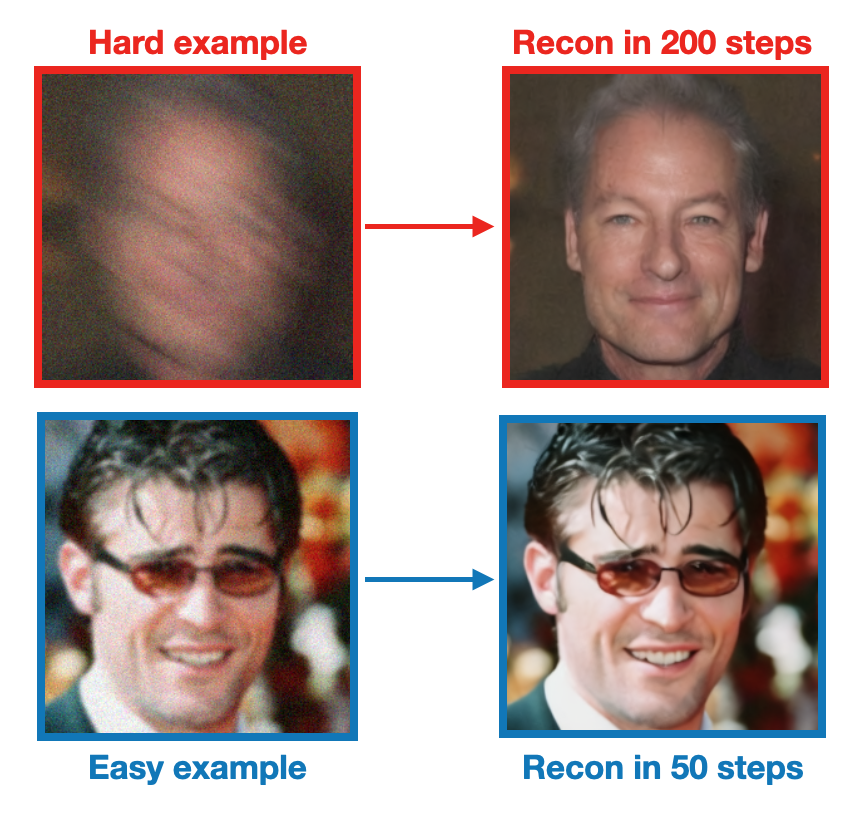

Adapt and Diffuse: Sample-adaptive Reconstruction via Latent Diffusion Models

Zalan Fabian*, Berk Tinaz*, Mahdi Soltanolkotabi (* denotes equal contribution)

ICML (Spotlight), 2024

NeurIPS Deep Inverse Workshop, 2023

GitHub / Paper Link

Latent-diffusion-based reconstruction of degraded images that estimates the severity of degradation and initiates reverse diffusion sampling accordingly, achieving sample-adaptive inference times. |

|

DiracDiffusion: Denoising and Incremental Reconstruction with Assured Data-Consistency

Zalan Fabian, Berk Tinaz, Mahdi Soltanolkotabi

ICML, 2024

GitHub / Paper Link

A novel framework for solving inverse problems that maintains consistency with the original measurement throughout the reverse process and allows great flexibility in trading off perceptual quality for improved distortion metrics and faster sampling via early stopping. |

|

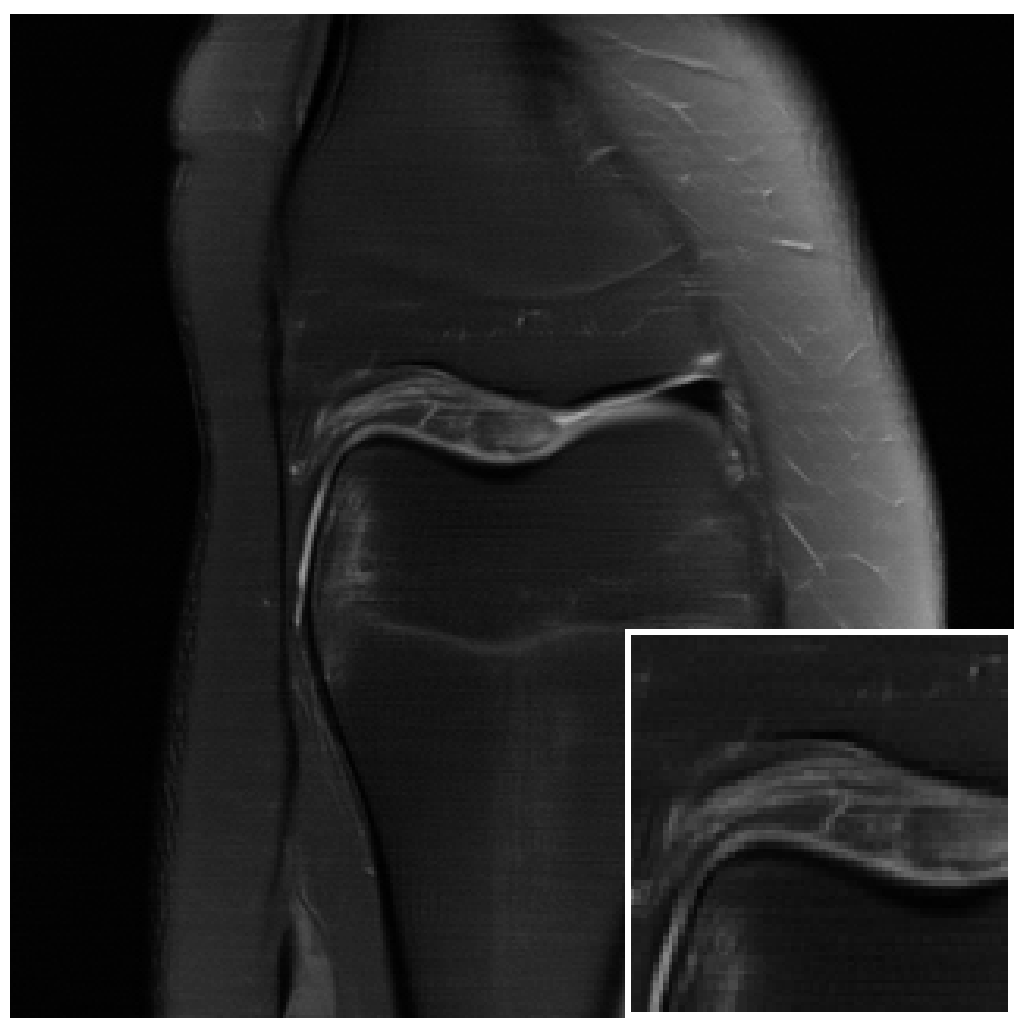

HUMUS-Net: Hybrid Unrolled Multi-scale Network Architecture for Accelerated MRI Reconstruction

Zalan Fabian, Berk Tinaz, Mahdi Soltanolkotabi

NeurIPS, 2022

GitHub / Paper Link

A hybrid architecture that combines the implicit bias and efficiency of convolutions with the power of Transformer blocks in an unrolled, multi-scale network to establish state-of-the-art results on the fastMRI dataset. |

|

Website template is proudly taken from Jon Barron (source code). |